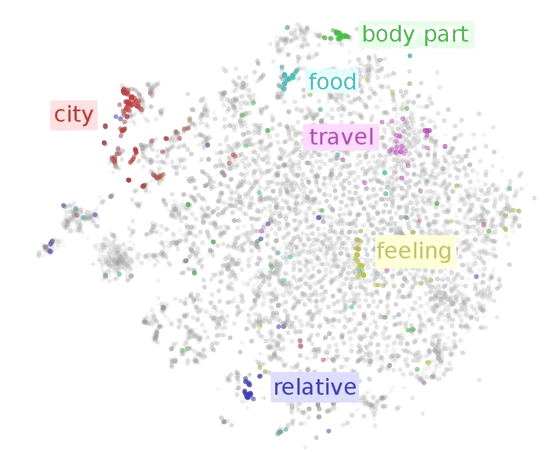

are dense, high-dimensional representations of words in a continuous vector space, where each word is mapped to a vector. These embeddings capture semantic relationships between words, such as similarity and analogy. For example, in a word embedding space, similar words like “cat” and “dog” are closer together, and relationships like “king - man + woman = queen” can be represented.

source: rude.io

source: rude.io

There are several types of word embeddings used in natural language processing, each with its own characteristics and strengths:

-

Count-based Embeddings:

-

Predictive Embeddings:

- Word2Vec: Learns distributed representations of words by predicting words in context using shallow neural networks.

- GloVe: Learns word vectors by factorizing the logarithm of the word co-occurrence matrix.

- FastText: Similar to Word2Vec but also considers character n-grams, enabling it to generate embeddings for out-of-vocabulary words.

-

Contextual Embeddings:

Each type of word embedding has its own advantages and is suitable for different tasks and scenarios in natural language processing.